Project Overview

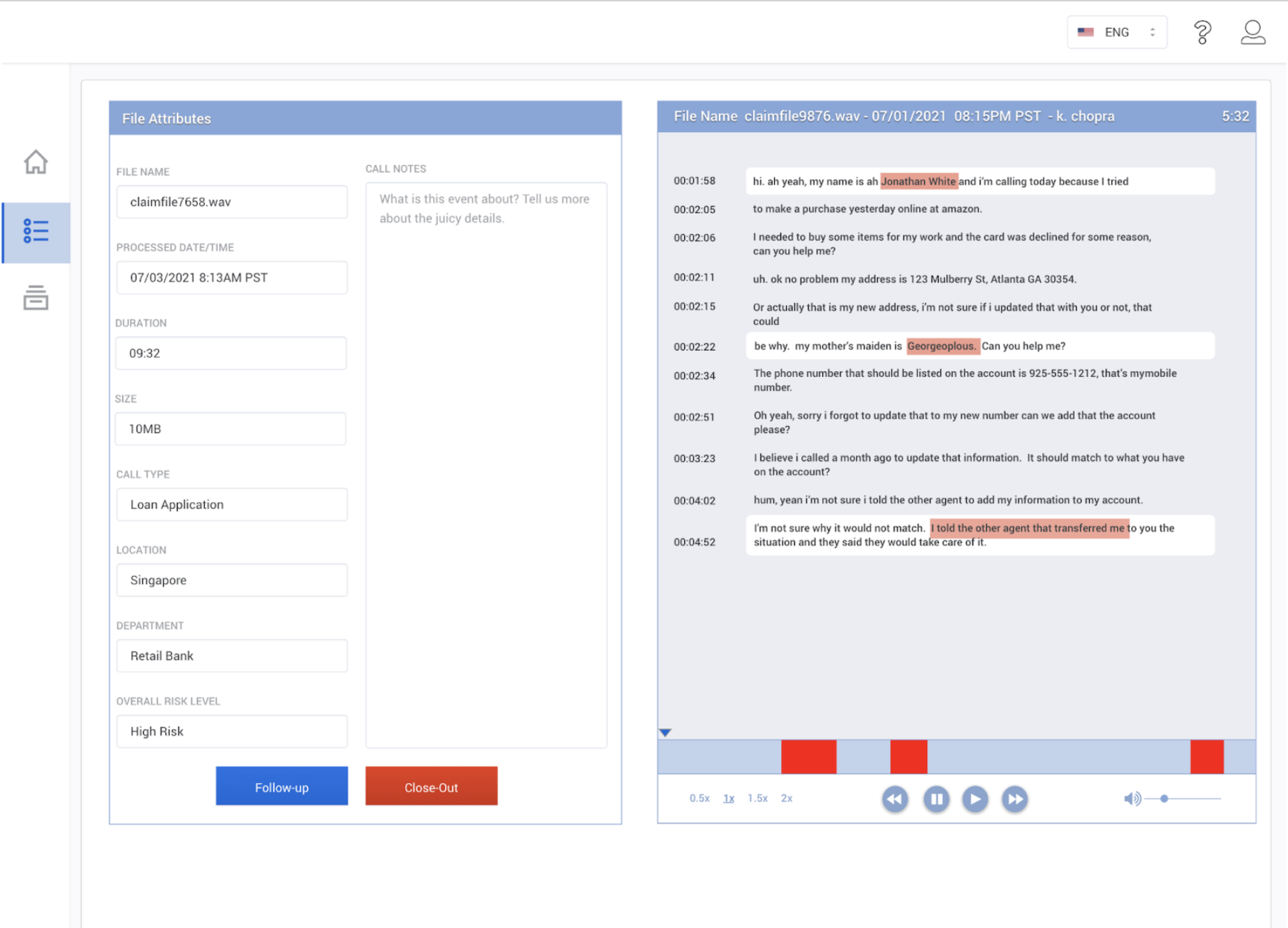

The product team aimed to introduce a new feature that identifies and highlights potential deception within agent-client conversations. This feature would analyze spoken dialogue and provide deception hints alongside the transcription. I was tasked with redesigning two existing wireframes to enhance the usability and learnability of this functionality, prioritizing improvements to the transcription screen.

Enhancing User Experience in Voice Analytics: Deception Detection

Client: Voice Analytics Startup

Industry: Voice Analytics

Project Duration: 10 Days

My Role: Market Research, Prototype Design, Wireframe Redesign

Company Size: 11-50 employees

Challenges

Tight Timeline: Only 10 days to deliver a high-quality wireframe redesign.

No Direct User Access: As a short-term contractor working on a new feature, I had no access to user data or direct user interviews.

Complex Feature: The transcription screen required the most attention because it integrates audio playback with deception flagging and still has to feel intuitive.

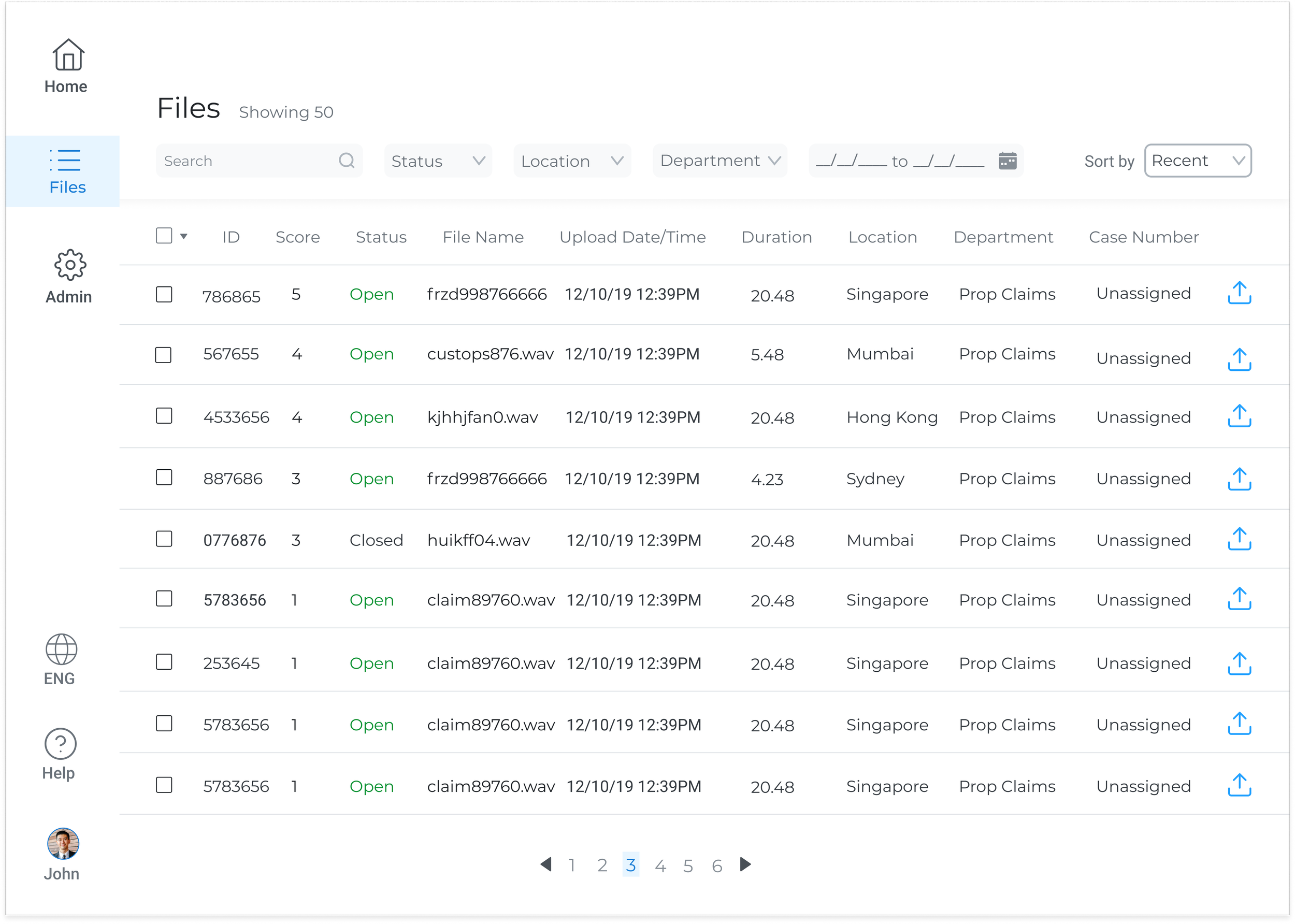

Table View

Audio and Transcriptions (Highest priority)

User Research & Ideation

To compensate for the lack of direct user data, I conducted informal interviews with users of similar products to uncover critical workflows:

Analysts frequently toggle between audio and transcription, often at increased playback speed (~1.25x).

They focus their analysis on flagged segments indicating potential deception.

Taking notes directly on cases is common to support decision-making.

Visual benchmarking of audio and video streaming platforms revealed best practices for player placement and navigation flow. These findings shaped my decision to surface the audio controls prominently and to streamline interaction with the transcription.

Design Iterations

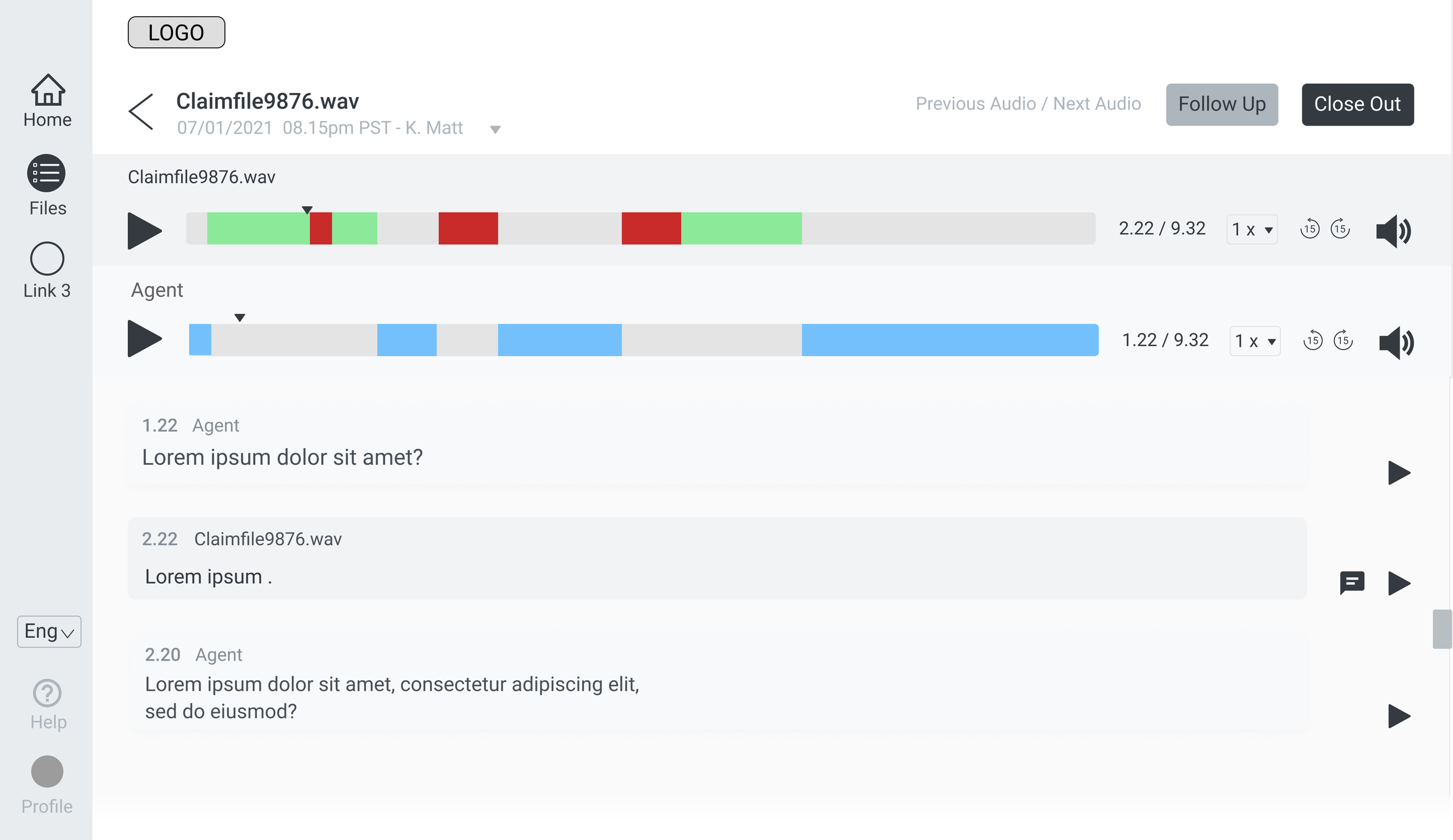

Iteration 1

Moved audio playback controls to the top of the screen to align with natural eye movement and promote ease of use.

Introduced separate audio tracks for the agent and the claimant, reflecting the dual-source nature of the conversations.

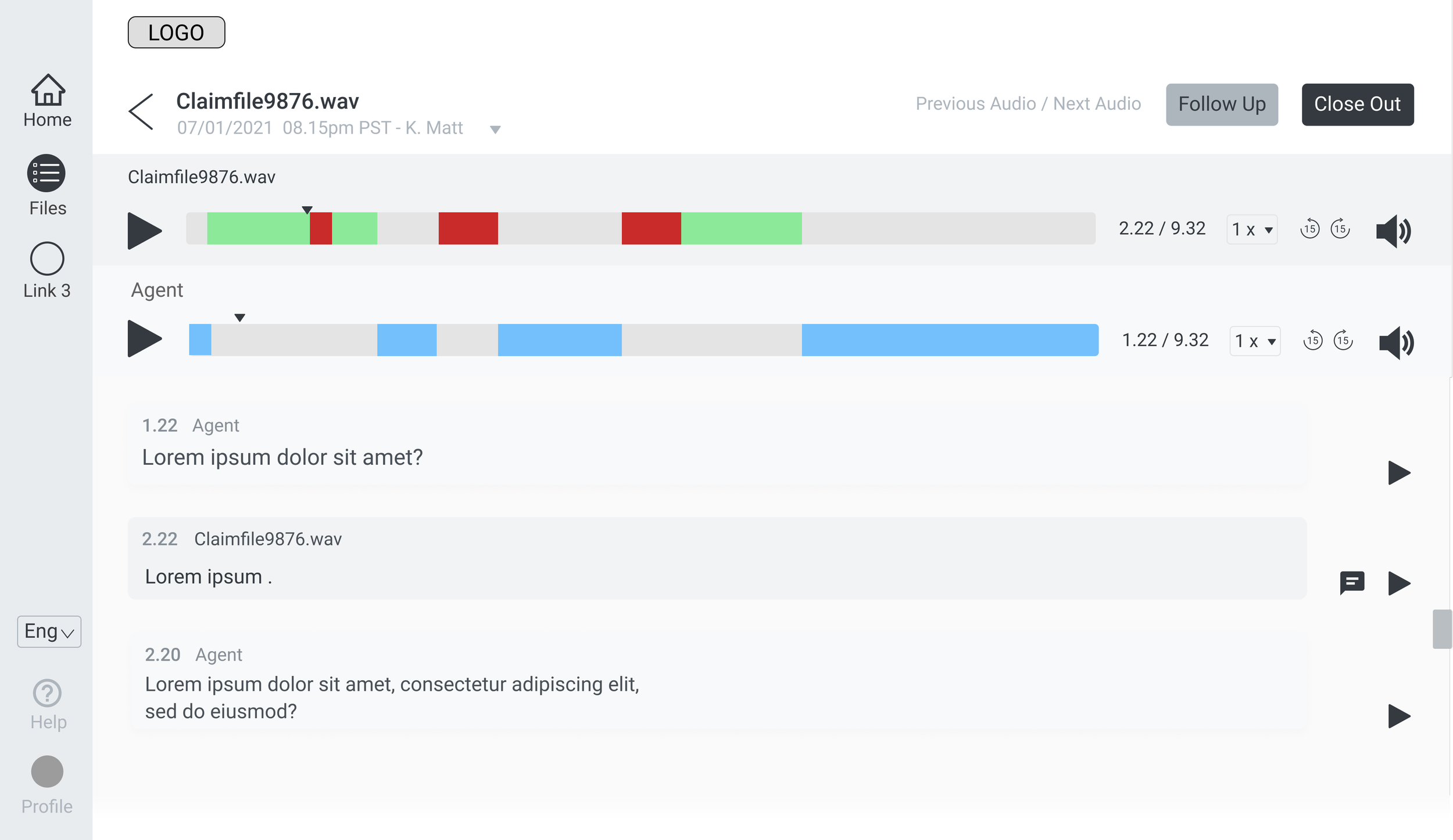

Iteration 2

Shifted from displaying full transcripts to highlighting only deception-flagged segments, reducing cognitive load.

Added timestamp and speaker identifiers for clarity.

Final Prototype

Introduced a commenting feature enabling analysts to annotate cases inline for future reference.

Refined layout for clear hierarchy between audio controls, transcripts, and notes.

Audio & transcriptions - Comment feature

Impact

Enhanced feature usability by aligning the design with analyst workflows, improving task efficiency and focus.

Reduced information overload through targeted transcript display, accelerating deception detection.

Improved stakeholder confidence with a polished, research-informed design ready for implementation.

Reflection

This project highlighted the vital role of user research, even through informal channels, in guiding AI product design under strict constraints. It reinforced the value of clearly articulating design decisions when collaborating with stakeholders. It also deepened my expertise in designing interfaces that balance complex data with streamlined user experiences in AI-powered tools.